Data racism: a new frontier

Perhaps without even noticing, you have read about data racism several times in the news in recent months. What is it? This blog seeks to explain – in the context of an emerging strand of work at the European Network Against Racism exploring racism in the digital space.

The growth of AI and other data-driven sorting systems is often pigeonholed as a ‘US issue’. On the contrary, the use of data-driven technology (including Artificial Intelligence, automated decision making systems, algorithmic decision making, the merging of large datasets with personal information, and good old fashioned social media scraping and surveillance) is increasingly coming to light throughout Europe. It is very much a European reality.

What is less explored is how such technologies discriminate. The flip side to the ‘innovation’ and enhanced ‘efficiency’ of automated technologies is how they in effect differentiate, target and experiment on communities at the margins – racialised people, undocumented migrants, queer communities, and those with disabilities.

Automated or data-driven decision-making tools are increasingly deployed in numerous areas of public life, in ways that inherently affect people of colour more. Increasingly, we witness this experimentation on marginalised communities in policing, counter-terrorism and migration control functions.

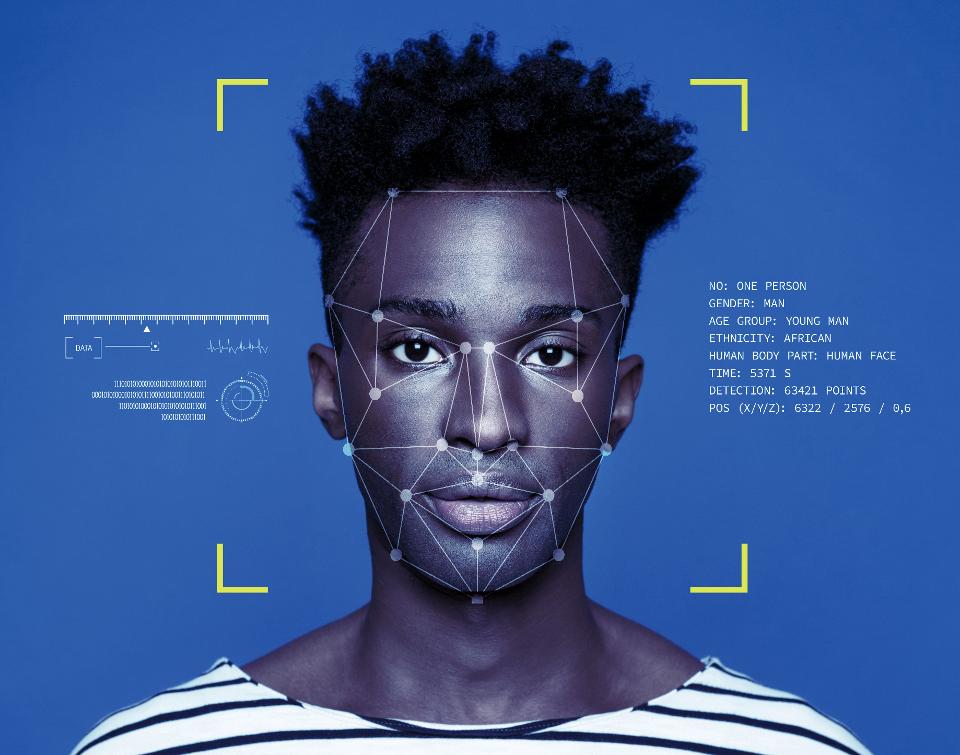

In ENAR’s recent study ‘Data-driven policing: the hardwiring of discriminatory policing practices across Europe’, Dr Patrick Williams and Eric Kind highlight the range of data-based techniques deployed by police forces across Europe with potential discriminatory impact on racialised communities. From the increased resort to facial recognition tools in crime investigation, despite evidence that they misidentify people of colour (in particular women), to older tech such as automated number plate recognition, which has been used to track and discriminate against Roma and Traveller communities, we see that the application of technologies is only exacerbating trends of over-policing and under-protection.

Person-based ‘predictive’ policing systems, which attempt to code or predict the risk of certain people committing crimes, are increasingly being trialled and implemented by a number of police forces. Examples include the UK’s Gangs Matrix and the Netherlands’ top 600 and top 400, also known as Pro-Kid [[Jansen, F. (2018). Working Paper: Data-driven policing in the context of Europe. Available at: https://datajusticeproject.net/wp-content/uploads/sites/30/2019/05/Report-Data-Driven-Policing-EU.pdf.]]. The latter system attempts to infer how likely certain children under 12 are to become future criminals. Who is the algorithm more likely to place on these databases? Overwhelmingly black and brown men and boys.

Another type of risk modelling is place-based, attempting to predict future levels of crime based on a range of data including socio-economic data and crime statistics, such as Amsterdam’s Crime Anticipation System. The normalisation of pre-criminality interactions is not only hugely problematic for the presumption of innocence: as with generalised prejudice and suspicion of criminality, these systems also focus on black and brown people in economically marginalised areas.

The recent legal challenge from the Public Interest Litigation Project (PILP) in the Netherlands, against the System Risk Indication (SyRI) system, highlights the dangers of such practices in terms of class, race and migration, often discussed as the ‘digital welfare state’. The PILP successfully argued that SyRI – in attempting to predict risk of fraudulent behaviour in benefits – breaches human rights. The court said that the system may also amount to discrimination on the basis of socio-economic and migrant status (the system was primarily deployed in low-income areas, disproportionately inhabited by ‘non-Western migrants’).

There is also a need to review how authorities use such technologies in migration control. At the border, programmes such as iBorderCtrl use facial recognition to somehow assess whether those seeking entry to Europe are telling the truth. This poses huge questions about racial bias and discrimination, rights to privacy and the processing of personal data, and Fieke Jansen and Daniel Leufer are right to seek answers from the European Commission as the main funder of iBorderCtrl.

Such potential breaches of fundamental rights have to be seen in a wider context in which migrants, and particularly those without documents, already face much wider breaches of privacy as a result of the construction of massive data-sharing systems such as the Common Identity Repository, as outlined by the Platform for International Cooperation on Undocumented Migrants.

We also see discrimination in the ways large social media platforms facilitate racism and other forms of abuse online, as an inherent feature of advertisement-based business models. This also rings true in the sphere of online knowledge production. When Google’s search functions return primarily pornography sites for searches on ‘black girls’ [[Noble, S.U. (2018). Algorithms of oppression: how search engines reinforce racism. NYU Press.]], we see that such companies will necessarily reinforce misogyny and racism.

Data racism: ‘coded bias, imagined objectivity’

There are more examples of existing or potential discrimination than possible to name. However, viewing these trends holistically, patterns emerge and the concept of ‘data racism’ starts to form. ‘Data racism’ encompasses the multiple systems and technologies – deployed in a range of fields – that either primarily target or disproportionately impact migrants and people of colour.

This disproportionality must be seen in a wider context of structural racism – a reality of existing historical injustices, persistent inequalities aligned with race and ethnicity in areas of housing, healthcare, employment and education, and repeated experiences of state violence and impunity.

The use of systems to profile, to surveil and to provide a logic to discrimination is not new. What is new is the sense of neutrality afforded to discrimination delivered through technology. As Ruha Benjamin explains in Race After Technology: Abolitionist Tools for the New Jim Code [[Benjamin, R. (2019). Race After Technology: Abolitionist tools for the New Jim Code. Polity.]], ‘this combination of coded bias and imagined objectivity’ is what sets these trends apart from discrimination of other eras.

The use of ‘objective’ scientific methods to differentiate and ‘risk-score’ individuals and communities for the purposes of exclusion has often escaped scrutiny – due to the basic but oft-successful argument that technologies are ‘not subject to classic racism’, because ‘the computer has no soul and therefore does not have the human defect of classifying persons according to skin colour’ [[Bigo, D. (2007). ‘Detention of Foreigners, States of Exception, and the Social Practices of the Banopticon’, in P.K. Rajaram and C. Grundy-Warr (eds.), Borderscapes: Hidden Geographies and Politics at Territory’s Edge, Minneapolis: University of Minnesota Press, pp. 3-33.]].

The tech sector and the computer science community necessarily implant their worldview (including their inherent biases) into the models they build – and there has been a huge spotlight on the lack of diversity in this sector – hence the phrase ‘technology is never neutral’.

Anti-racism and tech – what’s next?

It is clear that the algorithm is not on our side. In most cases, these systems are designed and deployed without sufficient testing for human rights or non-discrimination compliance. And like all discrimination, this has real consequences for people’s lives. The treatment rolled out is likely to be different from that received by those who are classified as white, with ‘origins’ in Europe, as male, as gender conforming, as able bodied, as straight.

Blasting the myth of ‘neutral technology’ is imperative for the anti-racist community. We also need to know more about who is deploying this tech and where, how it is impacting our communities, and how best to combat these harms.

First, it is vital that the anti-racist community – in particular the people of colour, women* and queer people doing this work – incorporate digital security, privacy and safety concerns into their strategies.

We must also reassess our strategies for combatting racism. Although we are still mastering the techniques with which we contest over-policing, brutality and racial profiling, we must now contend with another challenge. When law enforcement resorts to new technology to aid its practice, we find ourselves at further risk. Not only must we consider our physical safety in our relations with the authorities, we also need to be informed about the security of our data.

The next step is to reach out to other communities. We need to build bridges with those fighting for the protection of our personal data and privacy. We also need to make use of the knowledge and tools of lawyers developing strategies to confront big tech and state surveillance, and ensure discrimination is on the agenda too.

Who are the decision-makers and what do we want from them?

It’s also important to recognise that we now live in a world in which, particularly in terms of the digital space, the main decision-makers are not only traditional ‘policymakers’ – elected politicians, bureaucrats, the courts – but also tech company executives and the computer scientists building the models that will allocate resources and deliver services.

The homogeneity and lack of diversity in the tech sector is fundamentally shaping the way technology is applied in all areas of our lives. In her article ‘All the Digital Humanists are White, All the Nerds are Men, but some of us are Brave’, Moya Z. Bailey outlines the need for something more substantive than the ‘“add and stir” model of diversity’ if we are to unsettle the ‘structural parameters that are set up when a homogenous group has been at the centre and don’t automatically engender understanding across forms of difference’. As in all areas of life, structural approaches which seek to dismantle inequalities and break down privileges in classification are necessary in the tech field.

However, we also need an informed and democratic debate about how technology is encroaching on our rights, and for that conversation to put principles of equality and anti-discrimination at the centre. The responses to discrimination in tech have overwhelmingly been about reform: fairness and ethics, training and diversity. What these approaches lack is an understanding of structural oppressions and human rights, and a willingness to ask which parts are simply not appropriate for reform.

At this year’s Fairness, Accountability and Transparency Conference, mainly aimed at a computer science audience, Nani Jansen Reventlow, Director of the Digital Freedom Fund, said:

“When looking at possible litigation objectives for algorithmic decision making, we can make a distinction between two main categories: regulation (or: how do we make the use of algorithmic decision-making fair, accountable and transparent) and drawing so-called “red lines” (or: should we be using AI at all).”

We can’t skip over the conversation about red lines – which developments in tech are we simply not willing to accept? I think that discrimination should be one of those red lines. The systems deployed to make our lives better should be held accountable for making our lives better – and that should be the case regardless of how much money we have, whether we comply with gender norms, where we come from or the colour of our skin.

Sarah Chander is Senior Advocacy Officer at the European Network Against Racism (ENAR).